Quality data organization, documentation, and storage are essential for research data to be understood, used, and reapplied both by the researchers themselves and other scientists in the long term. Without appropriate information about what the data is, how it was obtained, how it is structured, and how it should be interpreted, data loses its value.

Effective data management includes three interconnected components:

- organization – structured and logical arrangement of data and files during the research project

- documentation – detailed information about data content, origin, and use

- storage – secure data storage both during the project and long-term after its completion

These processes must be planned from the project's beginning and maintained throughout the research cycle to ensure data compliance with FAIR principles.

Structured data organization is the foundation of research efficiency. Well-organized data facilitates daily work, reduces error risk, and ensures that information remains accessible and understandable both during the project and in the future. Data organization includes creating logical folder structures, consistent file naming systems, and appropriate format selection.

Effective organization begins with thoughtful planning at the project's start and continues with a disciplined approach throughout the research process. This allows avoiding chaos that occurs when data accumulates without a clear system and ensures that research results are reproducible and verifiable.

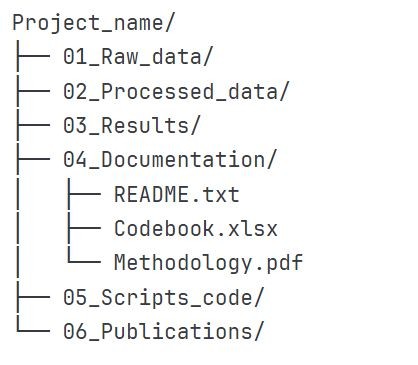

Folder and file structure

When starting to collect data, it's important to create a logical folder structure. Structure folders hierarchically with a limited number of levels (maximum 3-4):

Data format selection

The correct file format is essential for data to be long-term accessible, readable, and analysable. Choose open and widely used formats, for example:

- .csv – for tabular data (universal, opens in any program)

- .txt – for simple text (UTF-8 encoding)

- .xml or .json – for structured data

- .tiff – for images (without quality loss)

- .pdf/a – for documents (long-term storage)

- .r / .py (scripts, if using R or Python)

Avoid proprietary or specific formats that may lose support in the future, for example:

- .xls (old Microsoft Excel version) → use .csv

- .sav (SPSS) → export to .csv

- .docx (Microsoft Word) → choose .pdf or .txt

Important: Ensure that data is without access encryption or passwords if intended for public sharing!

Data documentation is a process that makes research data understandable and usable for both the author and other researchers. Without quality documentation, even the most valuable data can lose its significance over time, as information about its origin, structure, and interpretation is not preserved.

Documentation includes metadata creation, detailed data descriptions, and preparation of explanatory materials. This process should begin parallel to data collection and continue throughout the project, using standardized metadata schemas and controlled vocabularies to ensure data compliance with FAIR principles.

What are metadata?

Metadata is structured information about a specific published information unit (publication or dataset). Metadata is used to create machine-readable and human-readable records about information units in information systems, ensuring their discovery, linking on the web, use, management, and helping to reference (cite) them. Quality metadata makes data findable and reusable according to FAIR principles.

Creating data documentation files

It's recommended to create a data documentation file (for example, ReadMe.txt) where the following is described in detail:

- data acquisition process: methods, instruments, time and place, as well as possible limitations

- data processing steps: cleaning, transformation, calculations, and quality control

- file and variable structure: purpose, format, and interrelationships of each file

- terminology and abbreviations: explanation of all specific terms

- data interpretation: how to understand specific values, units of measurement, and possible errors

Describe all variables (columns/tables) with the following information:

- variable name and description

- data type (text, number, date)

- possible values or range

- designation of missing data

- units of measurement and precision

If using questionnaires or interviews, include the question list or scenario as an appendix. Specify used software versions or analysis scripts if they are necessary for data interpretation. Also document all changes in data and their reasons.

ReadMe file templates: The Latvian Data Stewards Network has prepared ReadMe file templates to facilitate dataset documentation: download template (.zip).

Metadata standards

Use standardized metadata schemas to ensure consistent and internationally recognizable documentation. The most common general metadata standards for research data are Dublin Core, DataCite Metadata Schema, Data Documentation Initiative (DDI) for social science data description.

For research data description, it's preferable to use field-specific metadata standards that provide deeper and more precise data characterization. If these are not available, use multidisciplinary standards that are widely recognized in the academic community.

Importance of controlled vocabularies

A controlled vocabulary is a standardized list of terms that ensures consistent use of keywords and categories. This significantly improves data findability and interoperability between different systems, as researchers use the same terms for identical concepts. Controlled vocabularies also reduce ambiguity risk and facilitate international collaboration.

Most popular international controlled vocabularies:

- Wikidata: universal, multilingual knowledge base with standardized identifiers for people, places, concepts, and objects

- LCSH (Library of Congress Subject Headings): widely used in library science and humanities for subject classification

- MeSH (Medical Subject Headings): standard terminology for medicine and life sciences, maintained by the US National Library of Medicine

- AGROVOC: multilingual vocabulary developed by the UN Food and Agriculture Organization for agricultural, forestry, and food science terms

- Getty Thesauri: collection of art, architecture, and cultural heritage terms with hierarchical relationships

When choosing a controlled vocabulary, consider your research field, target audience, and repository requirements. Many international repositories already integrate the most popular vocabularies, facilitating their use. Combine general and field-specific vocabularies to ensure both broad findability and precise categorization.

Practical recommendations

- Start documentation in early research stages – don't postpone it

- Use descriptive file names with version indications

- Regularly update documentation parallel to data processing

- Consult with UL data stewards about metadata standards

- Test documentation with colleagues to ensure its comprehensibility

Important: Quality data documentation is an investment in the long-term value of research and scientific community development.

Secure and sustainable data storage is critical for ensuring research integrity. Data storage strategy covers both active work during the project and long-term archiving after its completion. Proper storage approach protects against data loss, ensures compliance with security requirements, and guarantees that research results remain available for verification and further use.

Effective storage requires both technical solution selection and access rights management, as well as clear responsibility distribution among research project participants. This also includes data lifecycle planning – from active use to final archiving or deletion.

Storage during the project

During the study, use UL centrally managed IT systems and services that provide the necessary security level and automatic backup to protect research data from loss or unauthorized access:

- Permitted solutions: For research data management during the study, primarily use Microsoft 365 services – Microsoft Teams and SharePoint for collaborative work, which allow organizing team collaboration and project data management, as well as UL-provided servers.

- Limitations: OneDrive is intended for personal use and is not suitable for research project data storage. When a researcher leaves UL, their OneDrive account content becomes inaccessible, which can cause research data loss.

- Prohibitions: Research data should not be stored on unmanaged or external devices. This means data should not be located on local hard drives, USB drives, or cloud solutions without appropriate encryption and backup. We also recommend not using private cloud accounts, such as Google Drive or Dropbox accounts.

Access rights organization and collaboration during the project

Data access organization should be based on the principle of minimal necessity. Each person in the project should be granted only the level of access necessary to perform their specific tasks. People involved in the project may receive different access levels according to their roles and responsibilities.

Collaboration with external partners should be conducted through UL-recognized channels. Microsoft Teams allows secure collaboration with other institutions by creating controlled collaboration spaces with appropriate security settings. For large file sending, the store.lu.lv tool is available, which allows sending large files with validity periods up to 20 days.

Backup and data maintenance during the project

UL-managed Microsoft 365 systems automatically provide regular backup and technical maintenance, but researchers must take responsibility for their data organization and quality. This includes deleting temporary files, removing outdated versions, and maintaining data structure in a way that necessary information is easily findable.

Long-term storage and archiving

- Data evaluation at project end: At the research completion stage, each dataset needs to be evaluated individually to determine further action. Some data will need to be preserved long-term, others may be suitable for public access, but a third part can be deleted. When evaluating data, consider its connection to publications, potential for reuse, and possible significance for future research.

- Data preparation for archiving: Preparing datasets for long-term storage requires careful planning and a structured approach regardless of whether they will be publicly available or stored with restricted access. Datasets should be organized in a clear manner and documented. It's preferable to use open and widely used data formats to ensure data access in the future.

Metadata and documentation requirements: Quality metadata is essential for data to be findable and understandable in the future. Metadata should include information about data origin, collection method, processing steps, and tools used. Information about data structure, variable meanings, and possible limitations is also important.

Documentation should include enough information for an independent researcher to understand and use the data. This includes methodology descriptions, codebooks, calibration information, and any other materials necessary for data interpretation. Informed consent forms and other legal documents should be stored together with the data.

Transfer of responsibility

If the researcher responsible for data leaves UL, the transfer of data management responsibility to colleagues must be ensured. This includes transferring access rights, handing over documentation, and appointing a new responsible person. This process should be planned already during the DMP development stage.

CONFERENCE

CONFERENCE